Loveneet singh

Java Developer Roadmap [2025] - Become A Java Developer

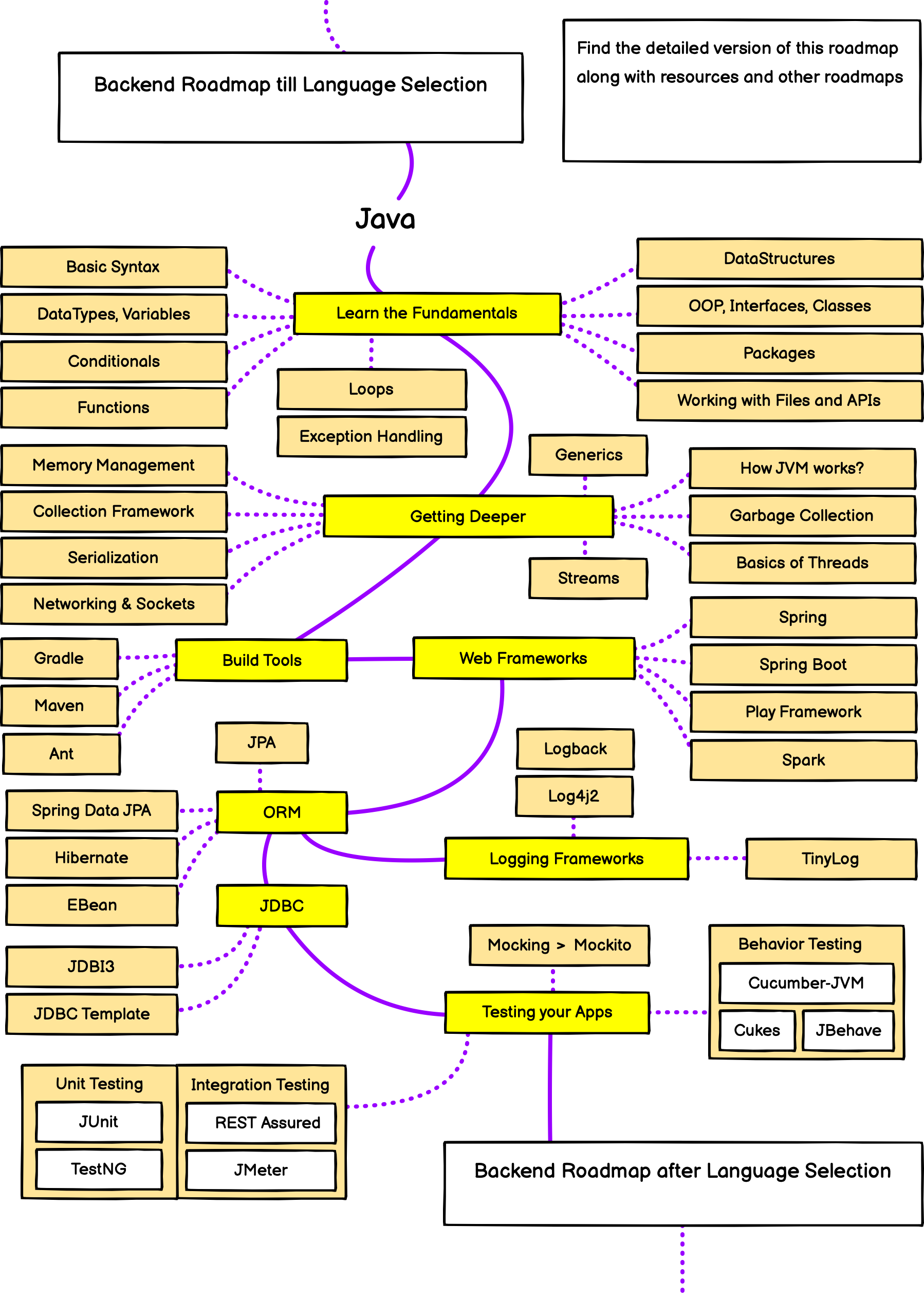

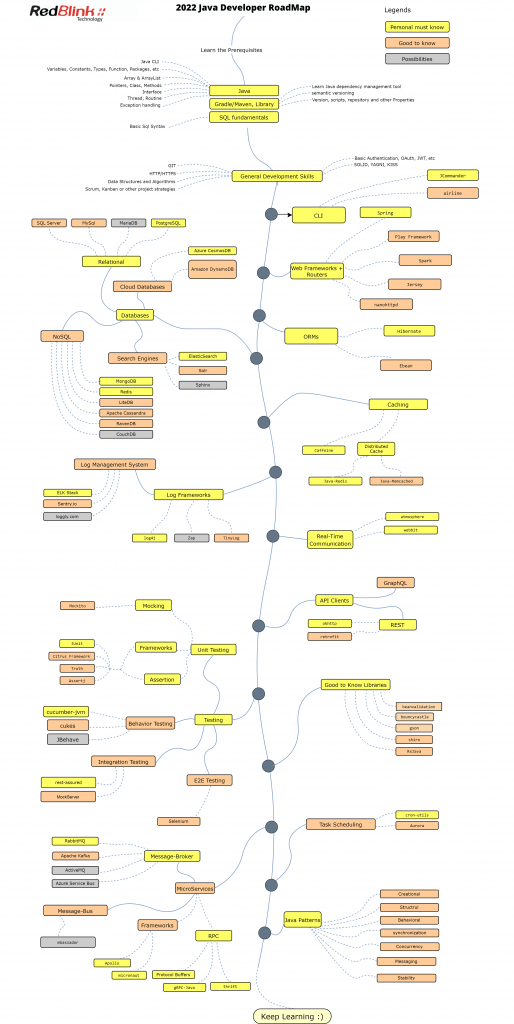

Java Developer Roadmap

Do you want to work with one of the most widely used programming languages in the business world?

Or maybe you aspire to wear the hat of a full-stack Java developer and create highly interactive applications?

If so, being a full stack Java developer might be the perfect fit.

This 2025 Java developer roadmap will help you get there. Java developer is one of the most frequently listed and best-paying jobs in IT. And why not? Java developers are essential today, whether the task is creating a stunning website, a high-performing e-commerce site, or another web-based application.

Java is also one of the most sought-after programming languages in the industry, and it makes for an interesting career. But since so many candidates compete for the same opportunities, it has become harder to stand out from the crowd.

That's why we have put together a 2025 full stack Java developer roadmap for everyone interested in this career. We will cover the latest skills you need to stay competitive in 2025. After all, equipping yourself with the right Java programming skills is a great step towards a rewarding career in software development.

Let's explore some must-have skill sets to become a highly-qualified Java developer professional.

Who is a Full Stack Java Developer?

So, what does the term "full-stack Java programmer" mean?

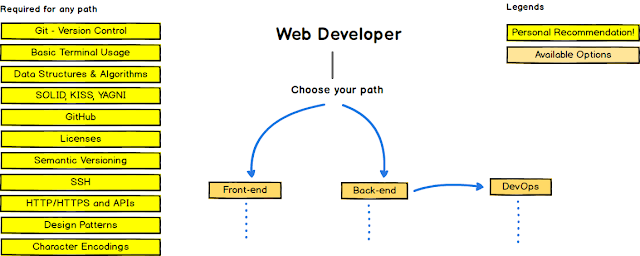

A programmer who has command over both the frontend (client side) and the backend (server side) of a website is known as a full-stack web developer.

Front-end developers are responsible for designing and developing the UI of a web application, whereas Java backend developers work behind the scenes and process the requests that users issue. Typical backend services include business logic, user validation, database handling, and server operations.

So, to become a full-stack Java developer, you need to be proficient in both front-end and back-end technologies. You should also have a general idea of the other skills and technologies related to full-stack development.

Additionally, a full-stack developer is fluent in multiple programming languages, is familiar with databases, has a talent for design and UI/UX, and brings a certain set of soft skills.

What is the Role of a Full Stack Java Developer?

Today, a full stack Java developer who covers both backend and front end holds one of the most crucial positions in technology. As discussed above, a full-stack developer is a professional who has command over both the front end and the back end of a web application or website.

Generally, the role of a full-stack developer is to develop user interfaces, websites, and databases, and to manage servers. Such professionals can also work with clients during the planning phase of a project.

Here are the skills, duties, and responsibilities on the roadmap of a Java developer:

- Knowledge of essential front-end technologies like HTML, CSS, JavaScript.

- Version control with Git and hosting platforms like GitHub, GitLab, and Beanstalk.

- Database management and caching mechanism.

- Server and configuration management.

- At least one server-side programming language like Java, Python, PHP, Ruby, etc.

- The agile development approach, to carry out multidisciplinary tasks without rush or disruption.

- Design and code software applications from business and technical specifications.

- Develop and execute unit, component, and integration level tests to verify requirements are met.

- Ensure coding standards and product quality targets are met through the completion of code reviews.

- Investigate and resolve complex technical issues for assigned projects.

- Enable continuous improvement across the SDLC through the introduction of new technologies and processes.

- Create and review technical and end-user product documentation.

Once you meet all the requirements stated above, take the next step on the full-stack Java development roadmap.

Key Skills to Become a Successful Full Stack Java Developer

This Java developer learning roadmap will help you become a full stack developer. Besides Java itself, there are a whole lot of technologies to learn, including HTML, CSS, and JavaScript.

Here's a roadmap to full stack Java development. Go through the article to discover which skills and tools to learn and which ones to learn first. There are many full stack Java development tools out there, and you need some exposure to them before you decide what to learn.

Another thing that always belongs on your roadmap, even if you are an expert, is staying updated. The full stack Java trends for 2025 won't be the same as next year's. New frameworks and tools are introduced all the time.

You must keep up with the trends to avoid becoming obsolete. Always look at what's new and upcoming.

-

JavaScript

JavaScript is an essential tool that helps full-stack developers create excellent websites and web applications. Whether you want to add a dynamic touch to a page or run code natively both in the browser and on the server side, learning JavaScript should be your first step.

With JavaScript, it becomes easy to modify HTML and CSS to update content, animate images and illustrations, and create interactive maps, menus, and video players.

So, a full stack Java programmer must have detailed knowledge of JavaScript, the DOM, JSON, and frameworks such as React and Angular. Additionally, they should keep up with new frameworks, libraries, and tools.

-

HTML/CSS

HTML (Hypertext Markup Language) is used to structure web content, whereas CSS (Cascading Style Sheets) controls the style and appearance of the website. As an expert full stack developer, you must have mastery over both languages to design a responsive website.

Both HTML and CSS help the developers in designing a website that is appealing to the eyes and easy to navigate.

-

Git & GitHub

Git is an open-source version control system that works efficiently for both small and very large projects. With it, a full stack developer can track even minor changes made to applications, code, websites, documents, and other project files.

A GitHub profile also helps developers work collaboratively. Hence, every Java developer should know the basic Git commands to benefit from better productivity, management, efficiency, and security.

-

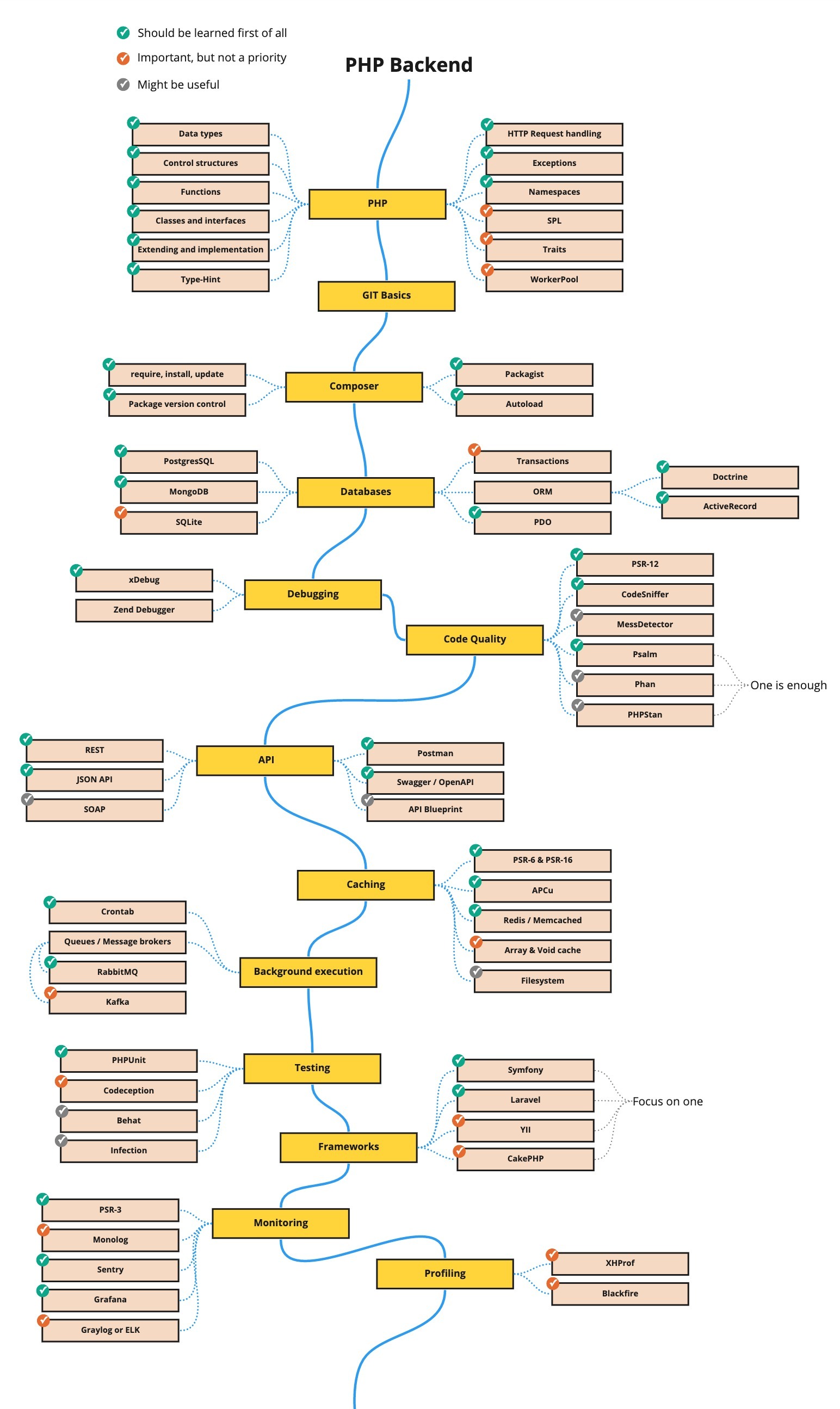

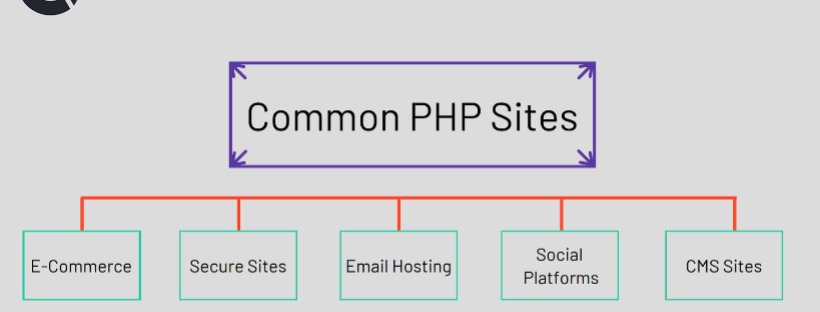

Backend Languages

A Java programmer should also be familiar with other backend languages such as PHP, Python, and Ruby, as well as the technicalities of web architecture. To establish yourself as an experienced Java developer, you must also know how to structure code, organize files, and store data in databases when building software applications and complex websites.

-

Databases & Web Storage

A database is necessary to store your application's data securely. It lets different teams work on the same data and keeps developers aware of modifications. To store data effectively and access it with ease later, full stack developers need to understand SQL databases such as MySQL, NoSQL databases, and web storage.

Moreover, experts must have in-depth knowledge of XML and JSON, and of relational and non-relational databases.

-

JSP & Servlets

JSP (JavaServer Pages, now Jakarta Server Pages) is a back-end technology for creating dynamic, platform-independent web applications. It supports dynamic content and has access to the whole Java API family. This is a must-learn technology.

It is also important to learn about servlets. These programs form the middle layer between the client and the server: they collect input from the user, present information from the database, and generate dynamic content.

-

Spring Frameworks

The Spring Framework is a complete yet lightweight framework that supports Aspect-Oriented Programming (AOP). This modular framework is useful for developers who work with MVC architecture.

Popular Spring projects for developing web applications include Spring MVC, Spring Boot, and Spring Cloud, so every developer should build up their skills in them. Whichever Java project you are handling, the Spring ecosystem provides layers of security features covering authentication, verification, and validation.

-

Spring Boot

Spring Boot is one of the top five Java frameworks you should learn. It helps in creating Java applications by eliminating boilerplate code. Additionally, it removes the pain of using the Spring framework and makes it easier to use, plus cuts down the time you spend on bootstrapping.

-

DevOps Tools

In the current market, there is a huge demand for full stack developers who know about DevOps.

They should also be familiar with its key lifecycle phases, such as continuous development, continuous integration, and continuous testing. Good knowledge of DevOps tools like Maven, Docker, Ansible, Kubernetes, and AWS is necessary for creating suitable environments and writing build scripts.

-

Web Architecture

Since the market is flooded with talent, complementary skills can open up career opportunities. That's why it is necessary to know the basics of web architecture.

Full-stack developers must have an extensive understanding of the structural and user interface elements of a web application, such as the Domain Name System (DNS), database servers, and cloud storage.

-

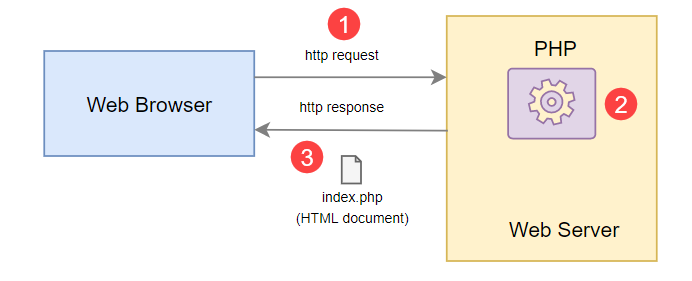

HTTP & REST

Full stack developers must have expertise in these two technologies for web development. Hypertext Transfer Protocol (HTTP) is the protocol through which the client and the server communicate.

Representational State Transfer (REST) is an architectural style for the interface between the frontend and the backend, built on HTTP methods and resources. So, every developer should have a good command of both.
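To make the client and server roles concrete, here is a minimal sketch that uses only classes shipped with the JDK (Java 11 or later). The class name `RestSketch`, the `/greeting` resource, and the JSON payload are hypothetical, chosen only for illustration; a real project would more likely use a framework such as Spring Boot.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

class RestSketch {

    // Backend: exposes one REST-style resource, GET /greeting (hypothetical path).
    static HttpServer startServer() throws IOException {
        // Port 0 asks the operating system for any free port.
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/greeting", exchange -> {
            boolean isGet = "GET".equals(exchange.getRequestMethod());
            byte[] body = isGet
                    ? "{\"message\":\"hello\"}".getBytes(StandardCharsets.UTF_8)
                    : new byte[0];
            exchange.getResponseHeaders().add("Content-Type", "application/json");
            // 200 with a JSON body for GET, 405 (method not allowed) with no body otherwise.
            exchange.sendResponseHeaders(isGet ? 200 : 405, isGet ? body.length : -1);
            if (isGet) {
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            }
            exchange.close();
        });
        server.start();
        return server;
    }

    // Client: sends an HTTP GET request and returns "<status> <body>".
    static String fetchGreeting(int port) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest
                .newBuilder(URI.create("http://127.0.0.1:" + port + "/greeting"))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode() + " " + response.body();
    }

    // Runs one request/response round trip and shuts the server down again.
    static String demo() {
        HttpServer server = null;
        try {
            server = startServer();
            return fetchGreeting(server.getAddress().getPort());
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        } finally {
            if (server != null) {
                server.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints: 200 {"message":"hello"}
    }
}
```

The frontend never touches the server's internals here. It only knows the resource URL, the HTTP method, and the JSON it gets back.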

-

UI/UX Design

To become a successful full stack developer, some knowledge of design is also recommended. In particular, you should know the principles of basic prototype design and UI/UX design.

-

Maven

Maven is one of the most essential Java tools. Programmers who come from the pre-Maven world of Ant and batch scripts know how painful it was to assemble and manage dependencies for a Java application.

Maven not only solves the problem of dependency management but also provides a standard structure for Java projects. As a result, it considerably shortens the learning curve for a new developer.

Hence, Maven is an absolute must-have skill for Java programmers.

-

CI (Jenkins, Bamboo, GitLab, etc.)

In addition to Maven, Jenkins is another must-know tool for Java developers. As software development processes have evolved, many organizations now use continuous integration (CI) and continuous delivery (CD), and the rise of DevOps has further fueled the adoption of Jenkins. So, we believe that every Java developer should learn it.

-

Hibernate

Since data plays a significant role in any Java application, Hibernate is an essential framework that every Java developer should learn. It takes away the pain of using JDBC directly to interact with persistence technology such as relational databases, and lets you focus on building application logic with objects. To learn Hibernate, enroll in a Java course today.

-

Microservices

Microservices built with Spring Boot and Spring Cloud are in demand, so Java developers should have this skill and understand the microservice style well. Large monolithic applications can be hard to build and maintain. Microservices are often easier to code, develop, and maintain because they split an application into a set of smaller, composable services.

-

JUnit

We suggest that every full stack Java programmer learn JUnit to stay competitive. It remains an important skill for Java developers in 2025, and it allows you to write tests better and faster. In addition to JUnit, you should also learn Mockito, a leading Java library for creating mock objects.

Since Java applications depend heavily on libraries, including the JDK itself, and on external services, a mocking framework like Mockito is usually needed to write tests that can run in isolation.
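To illustrate what "running in isolation" means, the sketch below replaces a dependency with a hand-written fake instead of a real database. It deliberately uses plain Java rather than JUnit or Mockito so that it runs without extra libraries. `PriceRepository`, `CartService`, and the prices are hypothetical names and values; Mockito would generate an object equivalent to the hand-written `fake` for you.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class CartServiceCheck {

    // Builds the service with a fake repository and returns the computed total in cents.
    static int totalWithFakePrices() {
        // Hand-written stub standing in for a database-backed implementation.
        PriceRepository fake = sku -> sku.equals("book") ? 1000 : 250;
        Map<String, Integer> cart = new LinkedHashMap<>();
        cart.put("book", 2); // 2 x 1000
        cart.put("pen", 4);  // 4 x 250
        return new CartService(fake).totalCents(cart);
    }

    public static void main(String[] args) {
        int total = totalWithFakePrices();
        if (total != 3000) {
            throw new AssertionError("expected 3000 but got " + total);
        }
        System.out.println("total in cents = " + total); // prints: total in cents = 3000
    }
}

// Dependency of the code under test (hypothetical; imagine a database or remote API behind it).
interface PriceRepository {
    int priceInCents(String sku);
}

// Code under test: it depends only on the interface, so a test can swap in a fake.
class CartService {
    private final PriceRepository prices;

    CartService(PriceRepository prices) {
        this.prices = prices;
    }

    int totalCents(Map<String, Integer> quantities) {
        int sum = 0;
        for (Map.Entry<String, Integer> entry : quantities.entrySet()) {
            sum += prices.priceInCents(entry.getKey()) * entry.getValue();
        }
        return sum;
    }
}
```

Because `CartService` receives its dependency through the constructor, the check never needs a running database, which keeps it fast and repeatable.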

-

TDD

Test-driven development (TDD) is also one of the important skills that every Java developer should learn nowadays. It is one of the most practical skills for Java developers: it can improve the quality of your code and increase your confidence in it. TDD's test, code, refactor cycle is fast and works really well in Java. We strongly suggest every Java developer follow TDD.
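The cycle can be sketched in plain Java as follows. In practice you would write the checks as JUnit tests; the `slugify` function and its expected results are hypothetical examples, not part of any library.

```java
import java.util.Locale;

class SlugTddSketch {

    // Step 1 (test): these checks are written first and fail until slugify exists and is correct.
    static void runChecks() {
        check(slugify("Hello World"), "hello-world");
        check(slugify("  Java  SE! "), "java-se");
    }

    // Step 2 (code) and step 3 (refactor): the simplest code that passes,
    // then tidied up while the checks keep passing.
    static String slugify(String title) {
        return title.trim()
                .toLowerCase(Locale.ROOT)
                .replaceAll("[^a-z0-9]+", "-") // turn each run of other characters into one hyphen
                .replaceAll("^-+|-+$", "");    // drop hyphens left at either end
    }

    // Tiny stand-in for an assertion library.
    static void check(String actual, String expected) {
        if (!actual.equals(expected)) {
            throw new AssertionError("expected " + expected + " but got " + actual);
        }
    }

    public static void main(String[] args) {
        runChecks();
        System.out.println("all checks pass");
    }
}
```

The second check is the kind you add after the first one passes: it exposes an edge case (extra spaces and punctuation), fails, and drives the next small change to the code.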

-

Java SE

Java SE is one of the most important skills for any Java developer. If you are not familiar with Collections, Multithreading, Streams, and the other essential Java SE libraries, it is very hard to write effective Java code.

Good knowledge of these core classes is significant for any Java developer and will help you write effective code. So, if you are a beginner, we highly recommend spending some time learning and improving your core Java skills. After that, take a look at the Java EE developer roadmap to become more professional.
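As a small taste of these libraries, the sketch below combines Collections, Streams, and a thread pool from `java.util.concurrent`. The class name, method names, and sample data are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.stream.Collectors;

class JavaSeBasics {

    // Collections + Streams: count how many words there are of each length.
    static Map<Integer, Long> countByLength(List<String> words) {
        return words.stream()
                .collect(Collectors.groupingBy(String::length, TreeMap::new, Collectors.counting()));
    }

    // Multithreading: square each number on a small thread pool, then add up the results.
    static long sumOfSquares(List<Integer> numbers) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<Future<Long>> results = new ArrayList<>();
            for (int n : numbers) {
                results.add(pool.submit(() -> (long) n * n));
            }
            long total = 0;
            for (Future<Long> result : results) {
                total += result.get(); // waits for that task to finish
            }
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(countByLength(List.of("java", "maven", "git", "junit"))); // {3=1, 4=1, 5=2}
        System.out.println(sumOfSquares(List.of(1, 2, 3, 4)));                       // 30
    }
}
```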

-

Elasticsearch

Elasticsearch is also a significant piece of software in the Java world. It is a search engine based on the Lucene library that allows you to reliably search, analyze, and visualize your data. Elasticsearch is often used together with Kibana, Beats, and Logstash, which together form the Elastic Stack.

Elasticsearch is getting more popular as more and more applications give users a way to analyze and visualize their data. Therefore, a Java developer should have Elasticsearch skills.

The Java developer roadmap is a demanding one. So, enhance your skills and become a highly paid Java developer.

Full Stack Java Developers Salaries and Job Opportunities

When IT companies hire a full stack Java developer, it is necessary to evaluate the budget and expenses. The salary of web developers depends on several factors, such as location, experience, present opportunities, and many other factors.

As discussed above, becoming a full stack developer requires specialization and picking up as many skills as possible.

According to talent.com, the average full stack developer salary in the USA is $110,686 per year or $56.49 per hour. Entry level positions start at $92,691 per year while most experienced workers make up to $145,000 per year.

Full Stack Java Developer Salary in Some Popular US States

Here is a list of full stack Java developer salaries in US states with a high rate of web development job opportunities. The average salaries are based on skills and experience as self-reported to Talent. Find the figures below.

- Full Stack Java Developer Salary in Virginia - $126,750.

- Full Stack Java Developer Salary in California - $126,750.

- Full Stack Java Developer Salary in Massachusetts - $125,000.

- Full Stack Java Developer Salary in New York - $125,000.

- Full Stack Java Developer Salary in Washington - $122,200.

- Full Stack Java Developer Salary in Illinois - $120,000.

- Full Stack Java Developer Salary in Mississippi - $62,679.

Note: The above figures reported may not be accurate as they may vary depending on the demand.

And here is the average full-stack developer salary according to other sources:

- Indeed: $105k/year, based on 10.5k reported salaries

- Glassdoor: $99k/year, with a low of $63k and a high of $157k

- ZipRecruiter: $100k/year ($49/hour), with a low of $50k and a high of $155k

- PayScale: $79k/year, with a low of $54k and a high of $115k.

The salaries and job opportunities of full stack developers across different locations are approximate. And, salary depends on various factors such as skills, experience, company, speed of delivery, and location.

Demand for Full Stack Java Programmers

What’s the scope of full-stack development in 2025?

A full-stack developer's career is one of the most in-demand and fulfilling ones. It combines front-end and back-end work to build web applications.

Due to the growing number of online platforms and digitally driven businesses, the demand for full-stack developers has been on the rise in recent years. As long as there is a need to develop web applications, the demand for full-stack web developers will remain high.

Hence, if you are planning to pursue a career in web development or Java development, this is the right time.

The Bureau of Labor Statistics (BLS) projects 8% job growth for web developers and designers from 2019-2029, double the national average for all occupations. These tech professionals build and manage websites for employers and clients.

Future Scope of Full Stack Java Developer

Within full stack development, the amount of experience you have largely determines your pay packet. In the US, the starting salary for a junior full stack developer can be $30,000-60,000, which is pretty high to begin with.

Once you get your first junior full stack developer job, you can keep expanding your skill set, your experience, and thus your salary. Whether you learn something new in Java or deepen the skills you already have, all of this will raise your value in the current and upcoming market. It will also keep you ahead of the competition.

Once you have proven yourself as an experienced industry professional, both inside your company and in the marketplace, you can command the high average salary of a senior full stack developer.

Conclusion

We suggest you go through the skills on this Java developer roadmap to improve your chances of staying ahead of the competition in 2025. With the skill sets mentioned above, you can write better Java programs and deliver faster.

This is what you want, right?

So, what are you waiting for? This is our 2025 full-stack Java developer roadmap. Start learning from different resources and become a better Java developer. We have kept it simple so that most people can follow it.

If you still have any questions, reach us today! We will assist you further in every possible way.

Angular Developer Roadmap 2024: Learn Angular Development

Angular Development Roadmap for Developers 2024 Guide

Do you want to become an Angular developer? Or maybe you are thinking of building more feature-rich web applications with Angular. Check out this blog to know more.

Angular has become one of the most popular free, open-source frameworks. It is equipped with robust components for writing readable, easy-to-use, and maintainable code. Large organizations such as Microsoft, Cisco, AT&T, Apple, GoPro, Udemy, Adobe, UpWork, Nike, Google, AWS, YouTube, and others have hired Angular experts. With powerful features such as its component-based architecture, Angular is in great demand for building dynamic web applications.

Does Angular Development have Jobs?

The US Bureau of Labor Statistics (BLS) predicts a 25 percent rise in the number of jobs for software developers between 2020 and 2030. The BLS does not track Angular developers separately, but its 8% growth outlook for web developers is well above the average for all occupations.

There are also some business reasons that make the Angular platform popular among entrepreneurs, such as:

- Increase application development speed

- Reduce development cost

- SEO friendly development

- Fast software release cycle

- Boost ROI

And this is what big organizations want, right? For these reasons, thousands of tech geeks are considering Angular as a career option and are seeking to upskill.

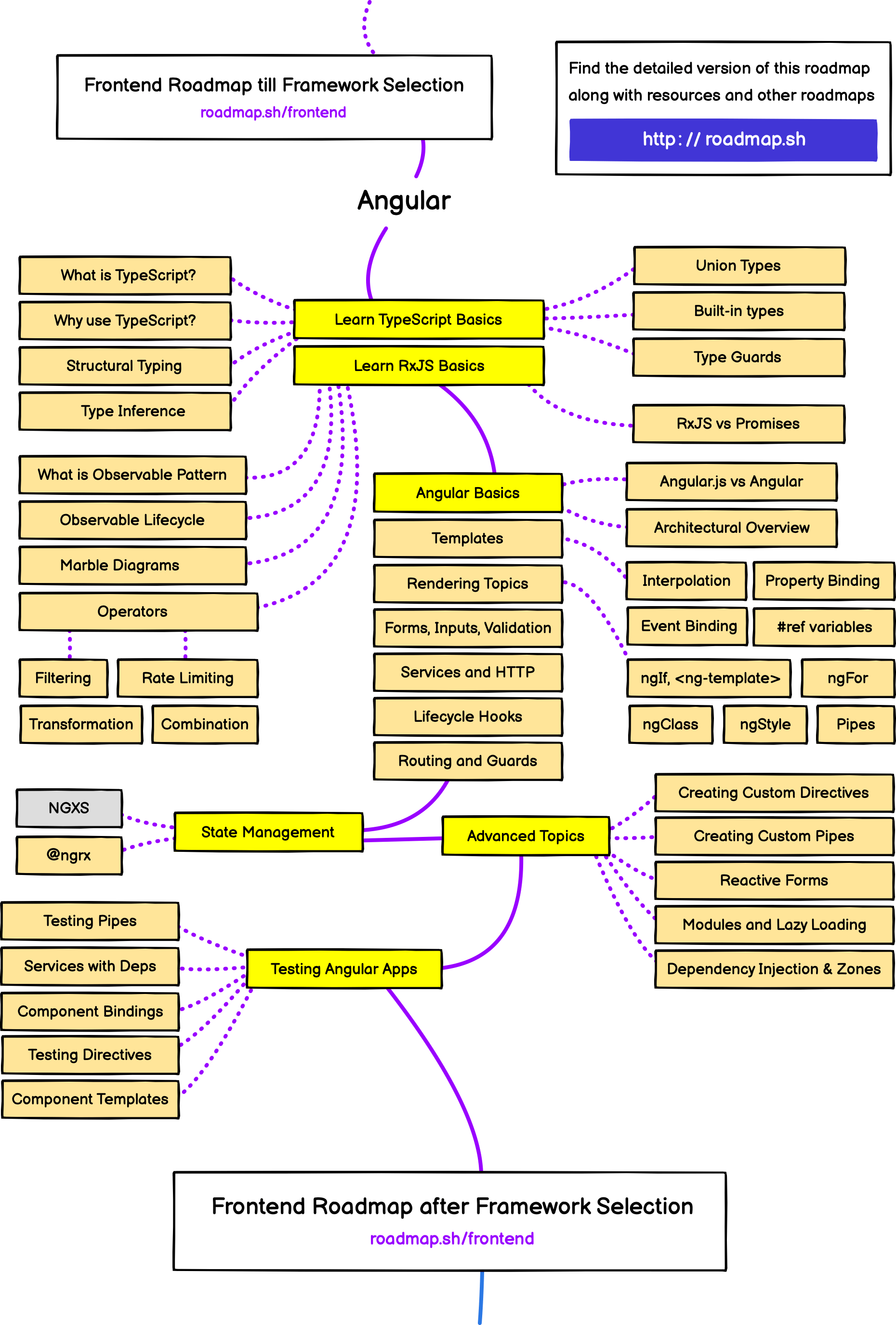

Yes, there is a lot to learn on the Angular web app development career path. But many of us get stuck in the beginner's circle because we do not know where to start or what to search for.

With this in mind, we have created an effective Angular developer roadmap for beginners who are eager to start their careers as successful developers.

Why Should You Learn Angular?

To build a promising future in web development, beginners must learn in detail about Angular, including how it works and what its different components are.

If you are interested in building your career, enjoy a challenge, or want to code and manage projects in a more organized manner, learning Angular is a strong choice. Its features give tech geeks many reasons to pick this leading JavaScript framework as their tool.

As we all know, Angular is supported by the tech giant Google. In addition, it comes with detailed documentation where you can find all the essential information. This helps you find solutions and resolve issues quickly.

So, what else is special about learning Angular? And what advantages does it bring to the table that developers should know about? Well, Angular:

- Helps developers keep their code clean and understandable.

- Communicates with back-end servers to load data into the application without refreshing the full webpage.

- Provides comprehensive solutions for all kinds of web application needs, whether it is a single page, lightweight processing, or complex logic.

- Includes reusable components.

- Provides data binding for HTML, giving users a rich and responsive experience.

- Helps in creating desktop-installed applications for Mac, Windows, and Linux.

- Supports progressive web applications and native mobile applications.

- Has a powerful MVC architecture for developing single-page applications (SPAs) efficiently.

The advantages mentioned above make Angular well worth learning for a beginner who wants to become a successful Angular developer. Moreover, if you want to remain competitive in today's job market, you should learn Angular.

What is the Role of an Angular Developer?

Angular developers are proficient JavaScript developers who also have experience and theoretical knowledge in the field of software engineering.

A person who wants to become an Angular developer must not just be an experienced JavaScript programmer but must also be proficient in the Angular framework and the tools around it. After all, the primary job of an Angular developer is to implement a complete user interface in the form of web and mobile apps.

What else does an Angular developer do? Here are the responsibilities:

- Designing a comprehensive and functional front-end application

- Guaranteeing the high performance of applications across all platforms, such as desktop and mobile

- Writing tested, idiomatic, and documented JavaScript, HTML, and CSS

- Working with external APIs and data sources

- Bug fixing and unit testing

- Interacting with the back-end developers

- Building the RESTful API

- Having close communication with external web services

Hence, an Angular developer does more than create and connect the modules and components within a software system.

How to Become a Successful Angular Developer?

Angular is widely used for web app development. However, there are some prerequisites you should meet before learning it. By checking them off, you can make sure that you are moving in the right direction and learning this popular web framework in the best possible way.

The following are necessary for becoming an Angular developer:

- Know HTML, CSS, and JavaScript.

- The basic idea of the MVC (Model-View-Controller) architecture.

- Basic knowledge of Node.js and NPM (Node Package Manager).

- Experience working with the command line.

- Exposure to TypeScript.

Nonetheless, as soon as you meet all the requirements stated above, take a step ahead on the Angular development roadmap.

Here we start our roadmap to becoming an Angular developer. Follow the steps below to reach proficiency and start an exciting new career.

-

Step 1: Introduction to Basic Programming Languages

Frontend development is about how we build user interfaces for the web. For this, you must have strong knowledge of JavaScript as well as a solid understanding of HTML and CSS.

The popular client-side libraries and frameworks (React, Angular, and Vue) are all built on JavaScript. Angular itself is written in TypeScript, a superset of JavaScript. Knowing JavaScript thoroughly is crucial to leveling up and acquiring the skills to develop complex applications. Alongside a solid foundation in JavaScript, you should learn HTML and CSS.

The most comprehensive courses on web development start with HTML, CSS, and JavaScript and how they interact with the DOM, which further helps in building a full-stack web application.

-

Step 2: Learn JavaScript

JavaScript is the foundation on which you build your Angular developer career. The original AngularJS was written in JavaScript, while current versions of Angular are written in TypeScript, which compiles to JavaScript. That's why it is necessary to learn the language properly and stay up to date with the latest features of Angular. So, start learning JavaScript. You might be confused about where to start, but there are a lot of resources out there that help you enhance your skills and strengthen your basics.

A solid foundation in programming starts with JavaScript. It can be used for both frontend and backend development, which means you can become a full-stack developer while focusing on mastering only one language. We guide you through the core concepts of JavaScript by building real projects. Our team works on real projects, and getting involved in such projects is the best way to learn.

-

Step 3: Great Command Over TypeScript

To become a successful Angular developer, you should be proficient with TypeScript.

TypeScript is the primary language for Angular application development. It is an open-source superset of JavaScript with design-time support for type safety and tooling. Its type annotations make code easier to read, understand, and debug.

We personally recommend building solid TypeScript knowledge. You can learn TypeScript from different sources or join our team to become a successful Angular developer.

-

Step 4: Start Focusing on Angular

Now, your next step is to learn the different components of Angular. In the beginning, you might find it difficult if you have never seen this tool and are not aware of the basics. To learn Angular, use online resources or get help from a professional Angular developer.

We, at RedBlink, have highly qualified and skilled developers who will help take your career to the next level. Our developers build progressive web applications with Angular. Such applications perform well and work offline too. We also build native mobile applications using Angular.

-

Step 5: Learn Angular Fundamentals

When learning Angular, you have to start with its fundamentals before writing code. Just as learning any language starts with its alphabet, learning Angular starts with its basics.

As discussed above, Angular is a framework. It contains numerous tools and features that help in building a web app successfully. Hence, take classes, start with the basics of Angular, and practice to gain a footing on which you can start building projects.

There are plenty of high-quality free options to get started with the technical part. We are great at setting your foundation. Need more information? Feel free to ask our technical team for suggestions. Contact us today!

-

Step 6: Do More Practice and Build Angular Applications

An essential step in the learning process for new developers is to practice what they have learned. Do not wait for your first job. You can start building and publishing Angular apps on different platforms. If you want, you can also develop platform-specific apps using Angular code. Each Angular project you produce is another accomplishment in your portfolio.

Additionally, there are many online communities dedicated to Angular. By reading blogs, watching videos, or listening to podcasts, you can hone your skills and become a successful Angular developer. Joining these communities is also an ideal way to build business contacts and get acquainted with Angular best practices.

Necessary Skills For an Angular Developer

Strong technical skills go a long way towards years of stable employment. And since the market is full of Angular developer jobs, it is necessary to stay competitive and ahead. You also know that modern software development requires much more than knowledge of your favourite programming language.

To be a successful Angular developer, you need to develop several different skills and apply them at the right time and in the right place to create an application that everyone loves.

Here are some essential skills you need to succeed as an Angular developer.

-

Knowledge of HTML and CSS

Knowledge of these basic building blocks will help in creating fast and functional applications. Angular applications still run in a browser, which means building user interfaces with HTML and CSS. Frameworks may change, but basics such as HTML and CSS are forever!

-

Understanding of Core JavaScript

JavaScript is the core language to learn if you want to work as an Angular developer. A good grasp of JavaScript also gives developers a basic understanding of other frameworks.

The HTML and CSS discussed above define the user interface or presentation of the page, whereas JavaScript provides the functionality. If you are planning to create a unique app or website with lots of features like audio, video, and animation, you need JavaScript to add such interactive features.

-

Command Over NPM (Node Package Manager)

NPM is a necessary tool for all web developers. It is a command-line tool that installs, updates, and uninstalls Node.js packages in your application, and it gives you access to thousands of client-side web development packages.

-

In-depth knowledge of the Angular framework

Every Angular developer has to be skilled in this framework. Since the framework is regularly updated and comes up with a new version, it is necessary to keep up with knowledge and skills.

-

Good Command of TypeScript

Angular is written in TypeScript, which is a superset of JavaScript. It promotes strong typing and results in fewer bugs. Hence, using the power of TypeScript to confidently refactor your code means your app can continue to grow and evolve as the needs of your users change.

-

Having Good Knowledge of RxJS

RxJS is a library for reactive programming using observables. It is bundled with the framework and used for many common tasks, such as making HTTP requests for data. Angular uses observables and the other features of RxJS to provide a consistent API for performing asynchronous tasks.

-

Have Skills of Using Git

Building even simple applications without source control is a dangerous idea. Just as TypeScript allows you to refactor your code with confidence, Git lets you experiment with new application features and coding techniques. Using Git helps you evolve your apps safely, with no fear of losing work or breaking existing functionality.

-

Keep an Eye on Every Detail

Developers who pay attention to minor details can find mistakes immediately, no matter how small they are. This helps in writing high-quality code, so build the habit of spotting mistakes in the early stages to save time and effort.

-

Establish Good Communication Skills

A qualified developer knows how to communicate effectively with others. Good communication gives a clear understanding of what other people want or require from the application, which is important for keeping every party satisfied.

-

Have Strong Teamwork Skills

Alongside great communication skills, it is imperative to have strong teamwork skills. Working well in a team improves communication and helps you learn more about Angular development. After all, sharing ideas, responsibilities, and tasks, and coordinating the work, is what produces a successful application.

Future Scope For Angular Developer

Google Trends and the current job market both suggest that learning Angular and React is worthwhile nowadays. These technologies lead the skill sets of web developers.

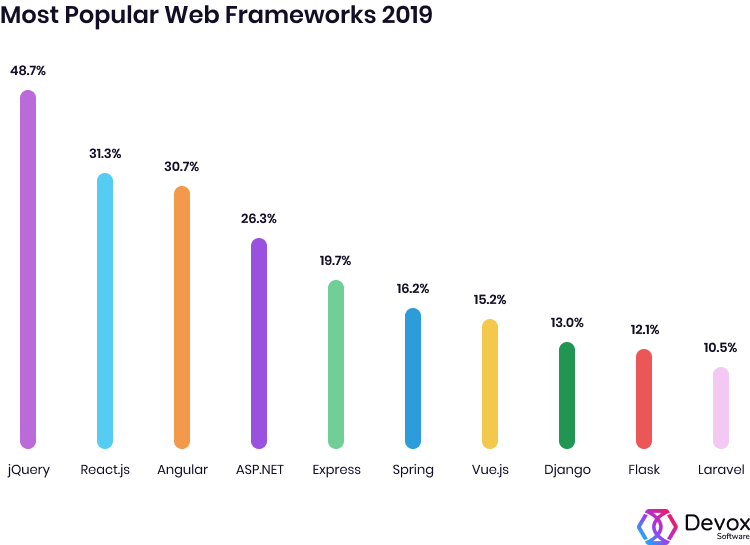

Angular is ranked as the second most popular technology and the best part is there is no sign of slowing down as you can see in the chart. Additionally, Angular is extremely popular and manages to make it into the top five of many surveys of best frameworks.

Have you ever wondered why?

Well, the fact is that this front-end framework comes well equipped with robust components that help developers write readable, manageable, and easy-to-use code.

Additionally, Angular helps in building interactive and dynamic single-page applications (SPAs) due to its compelling features including templating, two-way binding, modularization, RESTful API handling, dependency injection, and AJAX handling. Plus, you don’t need to rely on third-party libraries to build dynamic applications with Angular.

With the release of new updates and versions, Angular has become a major sensation across the world. Speaking of which, the future of Angular is extremely bright.

Its venerable features and components are capable enough to blow everyone away. The Angular team including both developers and designers are able to work together to build amazing single-page applications.

Over the years, Angular proved to be popular and helped thousands of large industries in building successful applications. Angular directives provided an easy way to create reusable HTML + CSS components. With constant review and evaluation to build their roadmap to prioritize requests, the future of Angular is bright.

The employment outlook for Angular developers shows predicted growth of approximately 31% between 2016 and 2026, a figure that exceeds the demand for generic software developers in the marketplace. This rate is much higher than the average employment projection for all other occupations in the United States of America.

It shows that the demand for web developers, especially those working with modern JavaScript and TypeScript tools, is increasing consistently due to the rich features and capabilities of the platform. Clearly, this established framework still has a lot to offer, which has sustained demand across a wide range of industries.

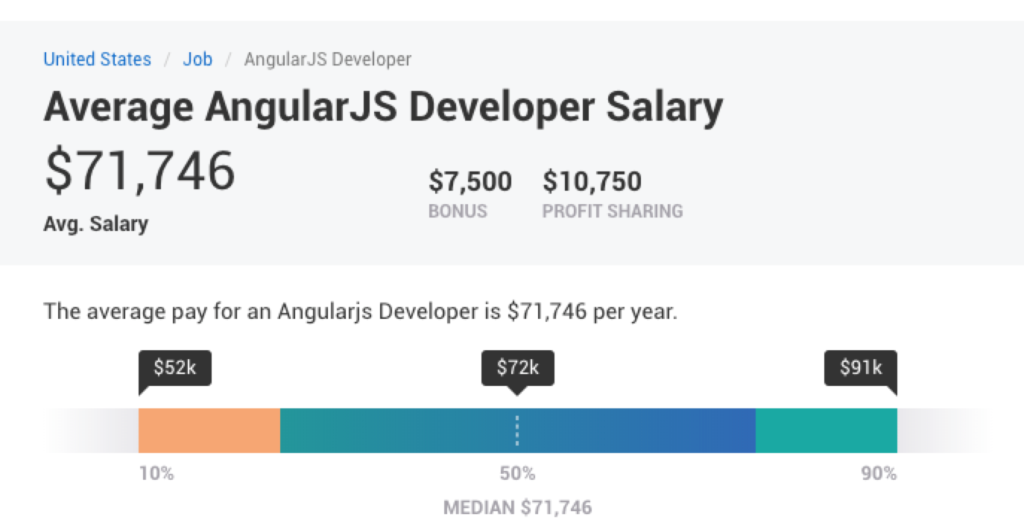

Angular Developer Salaries and Job Opportunities

When IT companies hire an Angular developer, they need to evaluate the budget and expenses. An Angular developer's salary depends on several factors, such as location, experience, and the opportunities currently available.

Let's discuss Angular developer Salaries and Angular developer job opportunities.

According to Talent, an Angular developer’s average salary in the USA is $114,989 per year or $58.97 per hour. Entry-level positions start at $97,500 per year while most experienced workers make up to $140,724 per year.

Angular Developer Salary In Some Popular Cities of USA

Here is a list of Angular developer salaries across different US locations with a high rate of web development job opportunities. The average salary figures are based on skills and experience self-reported to Talent. Find the figures below.

-

Angular Developer Salary in New York - $128,700

-

Angular Developer Salary in Oregon - $126,750

-

Angular Developer Salary in California - $125,000

-

Angular Developer Salary in Washington - $120,450

-

Angular Developer Salary in Virginia - $120,000

-

Angular Developer Salary in Massachusetts - $117,500

Note: The figures reported above may not be exact, as they vary with demand.

Also, these salaries represent a good picture of the costs of hiring developers in different cities.

The salaries and job opportunities of Angular developers across different locations are approximate. Your salary depends on various factors such as skills, experience, company, speed of delivery, and location.

Angular Developer Job Opportunities

There are many Angular developer jobs available in the US and across the world. Thousands of recruiters post Angular developer job descriptions on their official recruitment sites to hire the right candidate for building web apps.

Businesses, government institutions, and our social interactions are all moving towards the digital world. We all want to handle everything online. That is why, since the year 2000, numerous websites have appeared across the internet. It is estimated that the number of websites on the web increased from 17 million to over 1.6 billion in 2018.

Apart from that, the rise of the web as a platform has inspired businesses to shift online completely.

Unsurprisingly, this has produced a tremendous demand for web developers. According to the US Bureau of Labor Statistics, web development employed 174,300 developers in the US alone. The industry is predicted to grow 8% from 2019 to 2029, quicker than most other professions.

Let’s Learn Angular!

Many online communities offer Angular training courses that enable you to master and hone the skills involved in front-end web development. They help you gain in-depth knowledge of concepts such as TypeScript, SPAs, directives, pipes, and the other components mentioned above.

Whether you choose a self-paced learning program, an online classroom, or an internship with an IT organization, you need quality instructor-led online training, e-books, free beginner courses, and a dozen FAQs.

Additionally, our team of highly-skilled developers also guides you and helps you in receiving your certificate of completion. Following our detailed roadmap for Angular developers will also assist in establishing a good career ahead.

Start your career with our Angular developer roadmap in 2024! For more detail, reach us as per your terms.

Frequently Asked Questions

Why do big companies use Angular?

Among its benefits, the Angular framework offers cleaner code, quick testing, better debugging, advanced CSS classes, consistency with reusability, functionality, style binding, and many more. All these factors give developers compelling reasons to lean towards Angular.

How do I get a job as an Angular developer?

By learning and honing your skills in Angular and its components, it becomes quite easy to start working as a beginner in an IT company. You must have knowledge of:

-

JavaScript

-

TypeScript

-

Angular Framework

-

Web markup, primarily focusing on HTML language, and CSS

-

RESTful API integration

-

Node and Webpack

Hence, having the right skill sets can lead you to get the job that you have always been seeking.

Is Angular developer a good career?

The demand for Angular developers is high because of the high scalability of the framework. Angular also remains in demand due to its continuous releases, updates, and new versions. Hence, choosing Angular development as your career is a sound choice.

How much does an Angular developer make?

According to Talent.com, the average Angular developer salary in the USA is $112,493 per year or $57.70 per hour.

It is a good number. However, the pay scale of every developer depends on his/her past experiences, skillset, type of projects worked on, qualifications, region, and certifications.

What is the role of an Angular developer?

Angular developers have the following responsibilities:

-

Designing a functional front end application

-

Building high-performance applications across all platforms

-

Writing impeccably tested and documented JavaScript, HTML, and CSS.

-

Research, analyze, and develop product features

-

Working with external API & data sources

-

Bug fixing and unit testing

-

Interacting with the back-end developers

Are Angular developers in demand?

Yes, Angular developers are still in demand. The Angular framework is in high demand due to its ready-made templates and functions, which help in creating projects in a time-efficient manner. In addition, it requires less code, and that code is easy to read and manage.

Another reason is that the frameworks are quite safe as you can test the code frequently and have a proper system in place.

iOS Developer Roadmap 2024 : Learn iOS Development

⭐ iOS App Developer Roadmap 2024

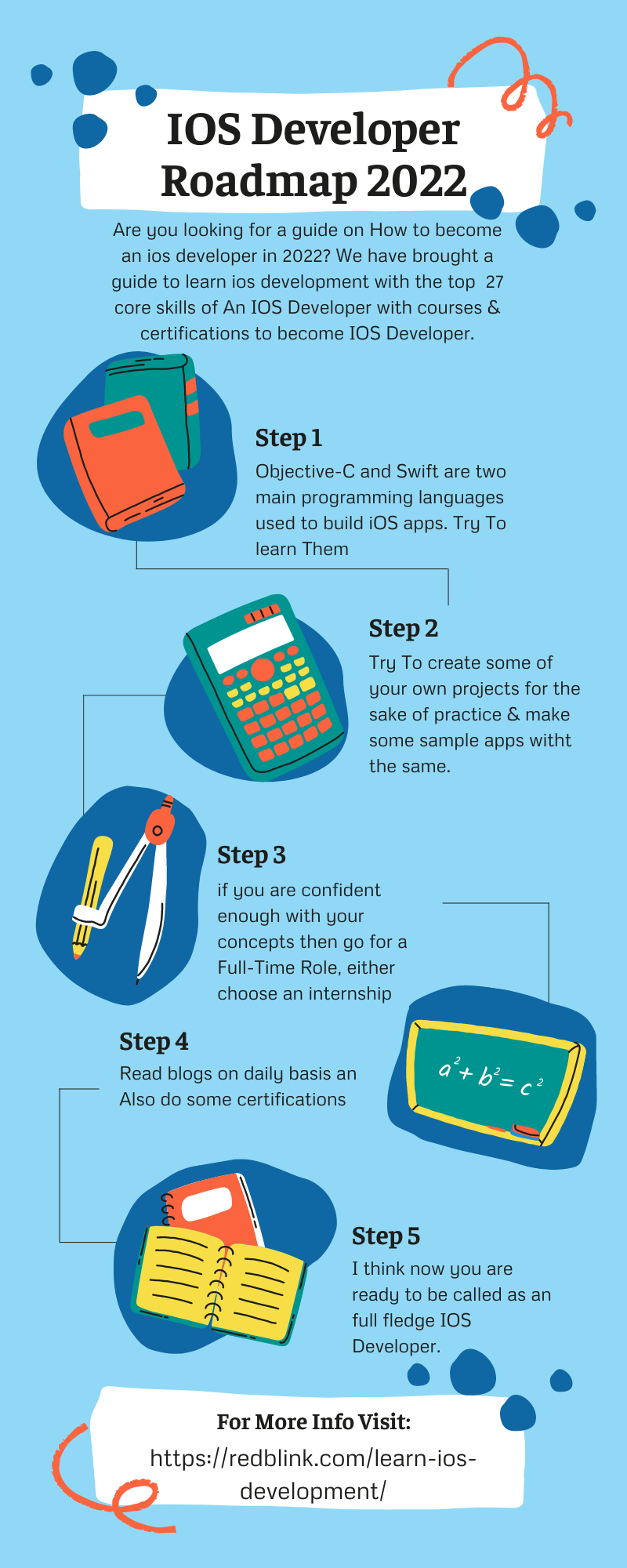

Are you looking for a step-by-step guide on how to become an iOS developer in 2024? We have put together an ultimate guide to learning iOS development, covering the top 27 core skills of an iOS developer along with courses and certifications.

The mobile app industry, especially iOS apps, is witnessing constant growth, and there are signs of continued growth in the foreseeable future.

The last 6 years have shown positive growth in the usage of mobile apps, with an increase of 36%. More than 1.96 million apps are available to download in the App Store. (Source: Statista)

This is encouraging news for mobile app developers. If you are considering your career as an iOS app developer, it is a good time to start building your skills.


The high salary and growth prospects make iOS development an attractive career to start. Moreover, iOS app developers are among the top-paid IT professionals, with a global average salary of $84,763.

This blog contains a roadmap designed for all IT and non-IT professionals who want to become iOS app developers and excel in this field. It also includes an overview of how to grow quickly in terms of salary and seniority.


Who Can Be An iOS Developer?

A candidate with an in-depth understanding of the iOS ecosystem, including sound knowledge of how Apple devices work, is eligible to start. Technical skills also play an important role in becoming a pro iOS developer, and knowledge of several programming languages is necessary.

iOS Development: A Good Career Choice In 2024 Or Not?

Looking at the increasing popularity of the iOS platform, it is safe to say that a career in iOS application development is a good decision.

Apple devices such as the iPhone, iPad, and iPod, along with the macOS platform, are rapidly gaining popularity. As a result, experienced programmers and newcomers alike are entering the iOS application development field.

Increasing job opportunities and good pay packages are alluring factors to plan a better professional career in the field of mobile app development.

Top 5 Reasons To become an iOS Developer

If you dream to work in an established tech company or startup or like to do freelancing, either of them is a great choice. Here are the top reasons why one should become an iOS Developer in 2024:

- The iOS environment is a more integrated and polished mobile app development platform (with compatibility with Android devices, its popularity is going to increase).

- iOS development tools are easy to learn, and you can quickly start developing apps using the built-in app development features on Apple devices.

- iOS developers are highly paid and among the most in demand.

- The Teaching Code site and SwiftUI tutorials are two great resources that help newcomers learn iOS easily.

- The demand for iOS developers is growing rapidly as the use of Apple devices increases.

- Apple Inc. now provides cross-platform support features that raise the demand for iOS applications. So, if you are proficient in coding in the C language, you should start learning iOS app development languages. Objective-C is the basic language for iOS app development.


How to start learning iOS development in 2024?

iOS app development is well received for its elegant, consistent operation and concise interface. Apple has released a concise document on human-computer interaction, called the Human Interface Guidelines, to help developers design their apps.


Referring to this guide, here are a few of the must-have skills for an aspiring iOS developer:

iOS Developer Online Classes, Certifications & Courses

To start with, you can do an online course to learn Apple’s Programming Environment for app development. You’ll learn how to use AutoLayout, UIButtons, and UILabels to create an interface. Also, you will learn to react to touch events in an app using ViewController and multiple views. Audio and video settings in an app environment are also part of online Apple courses.


Courses last either 2-4 weeks or 6 months. The cost of a course depends on its duration and curriculum.

Online Courses For iOS Development

iOS App Development with Swift Specialization

The Complete iOS 15 / iOS 14 Developer Course - and SwiftUI!

SwiftUI - Learn How to Build Beautiful, Robust, Apps

SwiftUI Chat App | MVVM | Cloud Firestore | iOS 14 | Swift 5

Apart from Apple, IT organizations working on the iOS platform offer internships and online courses for professionals. They also provide experience in real-time app development.

In addition to learning Swift, an iOS developer needs to learn about UI design and databases. Certification courses in these areas would help you get a better job.

So why wait to become a qualified Apple mobile app developer? Enroll yourself in a Certified iOS App Developer Training Course.

Pro Tip:

Some beginners think that a costlier course will help them become better iOS developers. This is purely a myth. Look for a course that offers practical experience of working on apps that are published on the App Store.

27 Core Skills of an iOS Developer In 2024

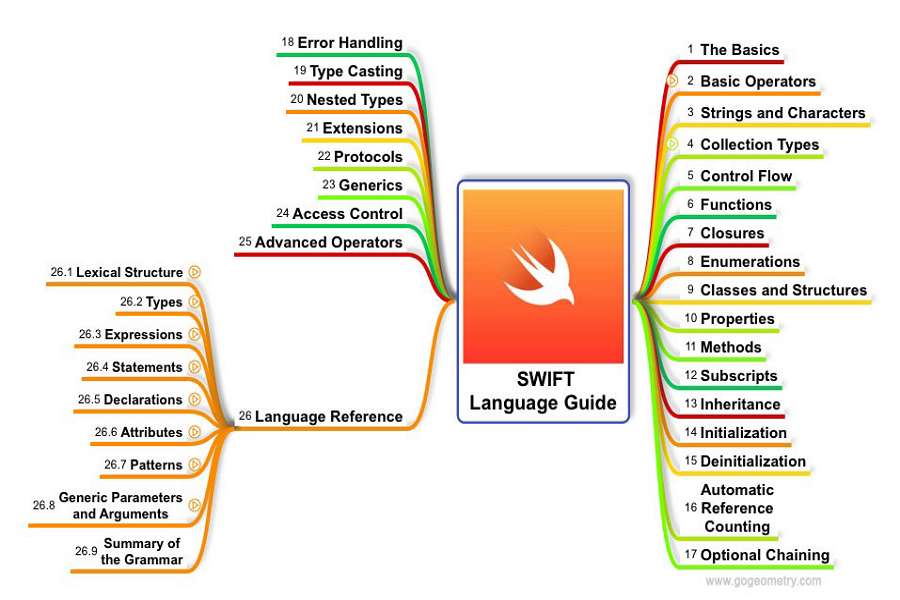

1. Swift Programming Language

Swift is Apple's core programming language, much as JavaScript is the core language of the web. You use it to create variables, write functions, and so on; it's just pure code. It is a relatively new language developed for Apple's app frameworks, adopting concepts from languages such as C++.

2. SwiftUI

It is a framework from Apple that lets developers write apps for iOS, macOS, tvOS, and even watchOS using Swift. So, while Swift is the programming language, SwiftUI is the set of tools to show pictures, text, buttons, text boxes, tables of data, and more in iOS apps. SwiftUI is becoming essential to add UI features in iOS apps. With the launch of iOS 14 widgets, it has become a necessary skill for iOS app development.

3. Networking and Working with data

Developing good networking skills helps in managing apps. Networking is the practice of fetching data from the internet or sending data from the local device.

There are numerous ways of doing this, but honestly the absolute least you need to know is how to fetch JSON from a server.

Also, converting the data your network code receives from the server into useful information is a required skill for an app developer. Maintaining the speed of the app is also part of the job.

4. Version control

Knowledge of version control, such as managing repositories on GitHub, is an added skill for an efficient iOS programmer. Git's architecture is also a wide and detailed topic.

By learning these skills you can start developing iOS apps. However, in the app development market, the more you practice, the better skill you develop.

Now, let’s make the learning process simpler by breaking down the tips into smaller chunks.

5. Learning Xcode and Interface Builder

Developing Xcode knowledge for building the user experience is a must. Also, many apps for Apple devices use the Cocoa Touch framework. Therefore, try learning several of Apple's interfaces, such as Game Center and Passbook, to become an efficient iOS developer.

6. Auto Layout setting Efficiency

Apart from coding, a mobile app developer must have expert-level skills in setting layouts. Mobile UI programming is based on positioning elements. To control the layout with wider customization, learning Auto Layout is a must.

7. Knowledge of Containers and Storyboards

To create operational apps, learning to use containers and storyboards is a plus. It helps with bug fixing, better navigation, and app flow management.

8. Implementation of Table Views

To add more dynamic functionality to iOS apps, a programmer must have in-depth knowledge of table views.

9. Database Experts

Database integration is the backbone of an app. Some of the popular databases used in iOS development are Realm, SQLite, and Core Data. By knowing how to interface with these packages, iOS developers can easily link their apps to databases.

10. Spatial Reasoning Ability

An unparalleled user experience is a must-have feature of an iOS app. Spatial reasoning ability is an added advantage when designing an intuitive interface and working out better functionality, and it helps in creating profitable and productive apps.

11. Develop Design Skills

Apple devices offer excellent design and an excellent overall look and feel. Design also plays a crucial role in the ranking of apps. At the beginner level it is difficult to get right, but one must gradually develop design skills to become a successful iOS programmer.

12. Proficiency in Grand Central Dispatch

GCD, or Grand Central Dispatch, allows you to add concurrency to apps. iOS applications perform multiple tasks at the same time, including pulling data from the network, displaying relevant information on the screen, reading touch inputs, and a lot more, and running all of these at once can be quite stressful on the handset. Concurrent programming skills let you handle this multitasking efficiently using GCD.

13. Integration with Data

14. Incorporating third-party APIs

To work on cross-platform apps and to speed up the app development process, APIs are used in the Apple development ecosystem. An iOS coder must understand APIs well enough to choose the right one carefully.

15. Testing Experience

To work as a good team player, you must adopt testing practices. The code is checked against various parameters. This standardization helps you learn more about developing apps and writing code that other developers can understand.

16. Skilled in Source Control

Source control means keeping track of all the changes that are made to the source code. Learning source code management systems helps reduce and resolve the conflicts that arise during source integration. Good hands-on experience in source control helps developers collaborate with the team and streamline the development phase.

17. Knowledge of Concurrency

Efficiently applying concurrency in apps allows complex apps to run flawlessly. So, iOS developers must incorporate concurrency and parallelism into their code.

18. Memory Management skills

iPhone and iPad devices are constrained on memory, and a per-process memory limit is set for running code. So, paying attention to memory use is something an iOS developer must learn.

19. Great communication skills

To understand the client’s requirements and to work in a technical team, an iOS Developer must develop good communication skills. These skills will accelerate your growth as an app developer.

20. Problem-solving ability

Every iOS app has some kind of complexity involved in it. The problem-solving skills are not a choice but a necessity for a successful iOS Developer.

TIP: To develop these skills, review the top-ranking apps on the App Store.

21. Team player attitude

App development is teamwork. To develop these skills, you must know how to share ideas with other team members and work alongside them. Listening, learning, and understanding others' points of view help you become a valuable team player.

22. Creativity and Innovation

To excel in competition among other developers, an iOS developer should develop creative and innovative skills. One must have the ability to develop user-friendly and functional iOS apps.

23. Discipline and dedication

A professional developer's job is never smooth sailing. At the entry level, learning is more important, whereas at more senior levels, app management skills become a part of your job. You can do this only when you are a hardworking and disciplined professional with a learning attitude.

24. Research Approach

For an iOS developer, it is a must to keep updated and try researching new technologies and methods. This is essential as there are rapid changes in the Apple ecosystem. GUI and cross-application development are new areas that one must explore.

25. Thinking Ability

A rational thinking approach will help you develop analyzing power and find the solutions. To solve complexity and to think from the end user’s view, developing a sharp thinking mind is a must.

26. Appealing Portfolio

An iOS developer is a highly paid professional. Numerous app development companies look for talented and skilled professionals. So, to provide a preview of your knowledge, design a portfolio that lists your apps and their links on the App Store.

27. Job Search

Finally, you need to develop an optimistic and practical approach to get a good job. Sometimes you need to take the help of job consultancies, references, etc. Here, your social media participation like creating a LinkedIn profile will also help.

Latest iOS Developer Job Opportunities & salaries In 2024

One of the required skills of an iOS app developer is the ability to do smart coding. The way you craft your code and make it logical as per the Apple devices matters a lot.

It is pretty clear – The better and smarter you code, the better job you will get!

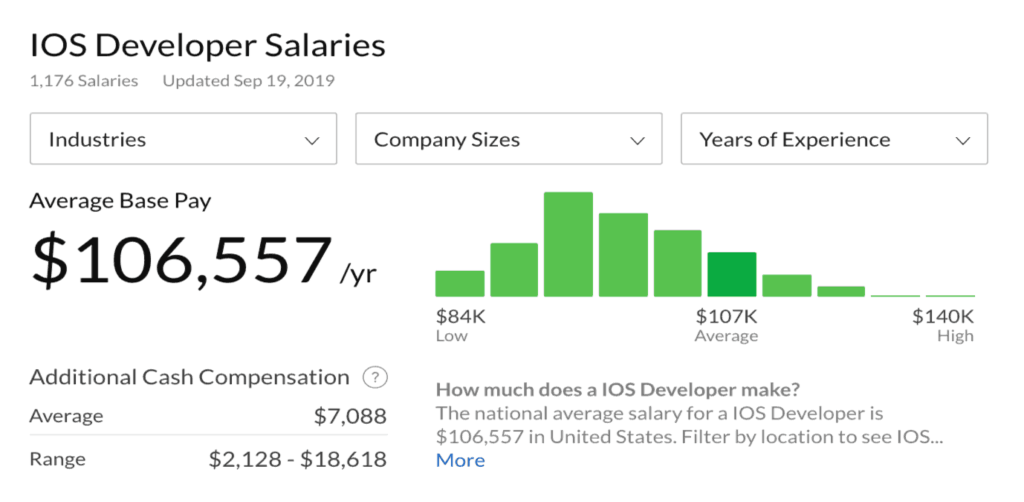

When it comes to iOS app developer salaries, the average reported annual salary is $94,360 per Glassdoor and $119,585 per Indeed. However, the salary depends on skill proficiency and varies with the company's level.

To help you find a better job, here are some iOS development thumb rules and design principles:

Basic iOS App Development Rules

- The operation should be easy and fluid.

- The navigation structure should be clear.

- Ensure aesthetic integrity.

- The syntax of the code must be simple and logical.

- The primary function should be highlighted.

- Consider the orientation (users sometimes use portrait mode and sometimes landscape mode).

- The touch target should suit fingertip size (44 x 44 points).

Latest Guidelines on iOS Development Design Principles

- Special attention to be paid to the screen size and screen resolution

- Four basic UI components (Navigation, Status bar, Content, Submenu)

- Icon size should be kept consistent

- Font-size should be made comfortable to read

- Slicing should be focussed

10 Tips for Getting Hired as iOS Developer in 2024

The iOS developer's job is a skill-based job. You can spend your time developing your skills in building UI/UX for iOS apps and can get a job in your dream company. Some of the qualities which you must develop are:

- Always try to develop better skills by learning new techniques.

- Keep track of the newly developed top-ranking apps on the App Store.

- Develop the passion to think like an end user and develop user-friendly apps.

- Set your goals and keep looking for the job skills demanded by the top app development companies.

- Learn interpersonal skills to become a good team member.

- Become independent and try to grasp knowledge to a level where you can independently develop iOS apps.

- Remember, a growing iOS developer has these three significant qualities:

- Logical thinking and a problem-solving approach;

- Spatial reasoning and system design knowledge;

- Abstract thought, imagination, and creativity to develop new solutions.

Above all, to become the first choice of iOS app development companies, work with passion and develop a mindset for out-of-the-box solutions.

Top 10 iOS Developer Interview Questions, Cheat Sheet 2024

As an iOS developer, I would like to suggest some basic and advanced questions for the iOS developer interview. Some of them are:

1. How to achieve concurrency in iOS?

The three ways to achieve concurrency in iOS are:

- Dispatch queues

- Threads

- Operation queues

2. From which application thread should UIKit classes be used?

They can only be used by the application’s main thread.

3. Which is the state an app reaches briefly on its way to being suspended?

An app enters the background state briefly on its way to being suspended.

4. Define the layer objects in brief?

5. Mention some important data types of Objective-C?

There are four, which are as follows:

- NSInteger

- BOOL

- CGFloat

- NSString


6. Which programming languages are used for iOS development?

Programming languages used for iOS development are:

- HTML5

- .NET

- C

- Swift

- JavaScript

- Objective-C

7. When is an app said to be in the not running state?

Basically, when it has not been launched or has been terminated by the system while running.

8. When do we use the category?

We use a category to group a set of related methods and to add methods to an existing class, including classes in the Cocoa frameworks.

9. What is the full form of KVO?

KVO stands for key-value observing, which enables an object such as a controller to observe changes to a property's value.

10. How to open the Code Snippet Library in Xcode?

Use the following keyboard shortcut:

Cmd+Opt+Ctrl+2

Conclusion:

There is a lot of demand for iOS developers today. To build a rewarding career in this field, try developing apps that are unique and can stand among the best iOS apps on the App Store.

Once you are confident about your skills, start applying for the job. Prepare your resume as per the demand of app development companies.

If finding a career in iOS development is your goal, be open to working in a company that can provide a real-time experience of building a competitive app. This experience will help you crack the interview for your dream job!

FAQs - iOS App Developer Roadmap 2024

Q1: What are the skills required for iOS Developer?

iOS developers require knowledge in the following areas:

- Objective-C and Swift (with the Cocoa and Cocoa Touch frameworks)

- Multithreading and concurrency

- Database management (SQLite)

- Networking, JSON and XML parsing

- Security best practices

Q2: How long does it take to become an iOS Developer?

Becoming an iOS developer can be a challenging journey. There are many factors that come into play when deciding how long it will take you to become an iOS developer. This article is not meant to discourage you, but rather inform you of what to expect as you build your skillset and prepare yourself for the job market.

The first thing to consider is whether you want to learn Objective-C or Swift. Objective-C was Apple's original programming language for the iPhone, while Swift was introduced in 2014 as a replacement for Objective-C. Swift has been gaining popularity since its release and has been adopted by most major tech companies including Apple, Google and IBM.

Q3: Is it hard to become an iOS Developer?

It's not hard, but it takes time and effort. You need to know your way around Objective-C, the native programming language for iOS development, and at least one other language like C or Python. You'll also need to learn about things like memory management, data structures and algorithms. Finally, you'll need to study up on UI design principles so that you can make apps that look good and work well.

But don't let that scare you off! If you're willing to put in the time and effort, becoming an app developer is much easier than you might think.

Q4: Is App development a good career in 2024?

App development is one of the most lucrative career options in the world. It can be a great option for anyone who wants to build their own app, but doesn't have the time or money to do so.

App development is a lucrative career option in 2024 as well. With over 2 million apps available on Google Play Store and Apple App store, it's getting harder and harder for developers to create an app that will stand out from the crowd.

As technology improves, so does our access to it. Our smartphones have become more powerful than ever before, allowing us to use them for almost anything: watching movies and TV shows, playing games, listening to music, reading books and magazines, watching live sports events etc.

This has created a huge demand for mobile apps that can help us get things done wherever we are, whether it's booking flights or hotels or finding nearby restaurants or stores, all with just a few taps on our screen.

10 Best AI SEO Tools To Get Your Content Ranked #1 In Google

AI SEO Tools

It’s exciting to see how SEO, NLP, and AI will evolve together in 2023. Modern websites are ranked by search engines based on the quality and uniqueness of the content. The content recognition of websites depends on the AI-based algorithms.

If you aim to have a top-ranking site, you need to understand how these algorithms work and keep up with the frequent changes in how search engines operate.

To dominate the ranking, you need to implement automated SEO tools so that you can make rapid changes in content and re-work SEO.

Natural language processing (NLP) and other AI tools can optimize website content. With this, you can expect top search rankings and stay there for a long time.

This post aims to give you an overview of the AI-based content and SEO tools that are likely to be popular in 2023 and will likely continue to be useful in 2024.

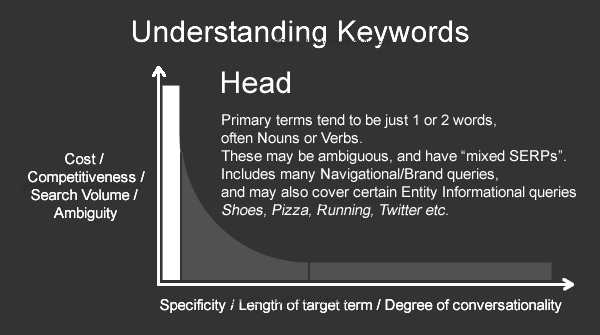

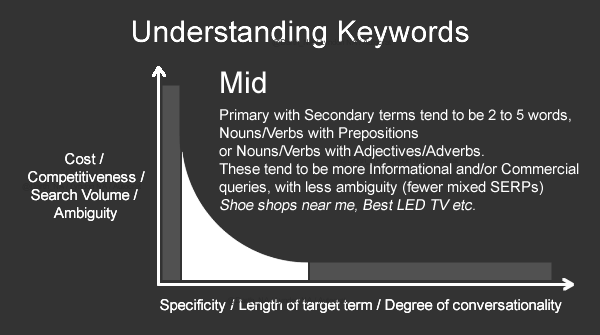

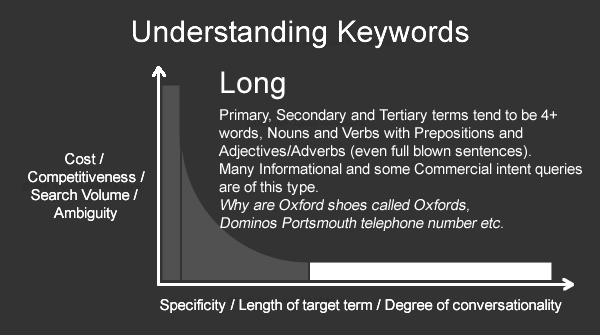

In this article, we will talk about how AI SEO tools help with automation and speedy content creation. AI SEO may be changing the way marketers help their websites rank higher on search engine results pages (SERPs). From understanding the nature of keywords to how keywords relate to the SERP, we cover it all.

If you're interested in using an AI tool, there's probably a lot more information out there than you want. This guide is designed to give you a quick and easy way to pick out the most powerful tools available, so that you know exactly what factors should be considered when deciding which tool is right for your site.

Best AI SEO & Content Tools 2024

The purpose of content creation is not only branding but also educating readers about new innovations. That content needs to be both SEO-friendly and user-friendly. So the question arises: how do you generate optimized content?

AI-based content tools are the answer. These tools identify entities, correct grammar mistakes, optimize keywords, and help create plagiarism-free, error-free content.

To help writers, here is a list of the top 10 AI-based content and SEO tools:

Recommended Tool - (Tried And Tested)

Content Polish

Most SEO tools focus on helping you before and while you write content:

- Keyword research

- Content planning

- Keyword density optimization

There are few really good tools for optimizing existing content with:

- Title and meta suggestions

- New content ideas

- The right FAQ questions to add

- Topical authority articles to write

Content Polish does that. It helps you drive more search traffic by giving you exact suggestions to implement on your blog post.

1. Neural Text

Neural Text helps with entity and keyword research on a specific topic. Its web references, which show the H1 and H2 tags used by other pages, help you quickly generate SEO-friendly content.

The generated copy is unique and easy for a general reader to understand. Professional content writers use this tool to find new ideas for blogs and articles. The web references are drawn from organic search results.

Pros

Powered by GPT-3 from OpenAI

Helps write useful and informative content in a user-friendly manner

Available in 25 languages

Cons

The free version is available only for a short time

Sometimes the search shows irrelevant results.

2. Copysmith

Copysmith is an AI-based content tool developed using GPT-3 to write product descriptions. The tool is developed by a team of copywriters, marketing experts, and AI researchers from the world’s top IT organizations.

Copysmith helps writers produce quality content quickly. To see how the tool works in detail, you can try the free trial. You can also get 35% off the Pro version on Black Friday.

Pros

Easy to use

Speedy optimized content generation

Cons

Most features are available only in the Pro version

Requires exact keyword research; otherwise, no results are displayed.

3. Writesonic

The tool helps create simple yet effective SEO-optimized content, and the generated content is updated based on keyword research. It helps you write content for websites, landing pages, blogs, and articles.

Pros

Helps you write meta descriptions, social media ads, and headers

You can edit, copy, share and launch your generated copy of the content.

Cons

You need to learn to use the tool to create effective content

The free version is only for a limited time.

4. Jasper

If you want to automate the process of content writing and make it SEO-friendly, Jasper (formerly known as Jarvis) is one of the best tools.

The tool works not just for articles but also for social media posts, marketing copy, and more. In addition, Jasper supports more than 25 international languages.

Pros:

Fast and automated content generation

Affordable AI-based content generation tool

Cons:

No long-form content with the starter plan

Requires you to pay extra for each additional user

No free tier or trial

5. Inlinks

Inlinks helps you generate entity-based content that is optimized for SEO requirements, which helps your content rank higher in search engines.

The semantically related ideas from the Google knowledge graph help you analyze content on various parameters.

By scouring any common words and phrases, Inlinks automatically identifies entities for entity SEO.

Pros:

Easy to use content tool

Optimized results that help in creating high-quality content

Cons

The free version is only for a short period.

Paid plans are costly

You need to learn how to use the tool; otherwise, the results will be off-topic

6. MarketMuse

MarketMuse is one of the best SEO automation tools out there, but it is also one of the most expensive. However, MarketMuse offers a free tier that you can use for as long as you want without taking a paid plan.

Keyword generation and search optimization help you generate top-quality content. The content editor helps you insert the right keywords so that the content can rank high on SERPs.

Pros:

Good keyword suggestions

Lots of useful features

Generous free tier

Cons:

Mostly aimed at enterprises and marketers

Paid plans are very expensive

7. ContentPace

Contentpace automates keyword research and optimization. It works by generating a report on a keyword, using search engine results to provide useful information on high-ranking websites.

It then creates a content brief that defines the entities to cover. The whole process improves the quality of the content, which makes Contentpace a good AI assistant for bloggers, writers, and SEO executives.

Pros

Results-driven SEO and content generation tool

User-friendly, high-quality tool

Free version available

Cons

A paid subscription is costly

Produces detailed, results-driven reports that are not always needed

Complex for beginners

8. TextRazor

The tool helps extract the entities and keywords for a specific topic. TextRazor offers complete cloud or self-hosted text analysis based on natural language processing techniques. This helps in creating unique, SEO-friendly content that can rank on search engines.

Pros

Advanced content generation tool with API

Free version available for a short time

Cons

Knowledge of content optimization is required to use the tool

Advanced features are not available in the free version

9. Frase

Frase is described as an AI-powered SEO content creation tool. What sets Frase apart is that it uses its own AI, called Frase NLG (Natural Language Generation), rather than GPT-3. The tool is designed to create top-ranked content.

Frase lets writers create their own workspace. You can choose the length, keyword insertion, and other creativity parameters, and it will quickly help you generate the content.

Pros

Optimized tool for blog writing

Supports SEO analytics

Cons

Most features require a paid subscription

Still under development

10. Rytr

Rytr is another popular AI writing tool that helps you create anything from YouTube video descriptions to social media bios. The tool offers easy and simple ways to write blogs that meet SEO requirements.

The tool is specially designed to create technical content. However, it is used more for creating landing pages with keyword optimization. The tool helps you create high-quality content that scores well on readability and search optimization.

Pros

Allows you to write lengthy website pages, landing pages, or white papers

Helps writers create top-quality, SEO-optimized content

Cons

Designed to create long content

The free trial is for a short time.

AI for SEO Content Creation And Optimization

Content creation and SEO practices revolve around NLP (Natural Language Processing) techniques. NLP works in three stages: recognizing text, understanding text, and generating text.

Recognition: The process starts by determining the length of the text. An NLP system converts text into numbers so that computers can process it.

Understanding: The converted text undergoes statistical analysis to discover the most frequently used words in the given context.

Generation: The NLP tools try to understand the keywords, questions, text, and other content related to a specific topic.
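To make the first two stages more concrete, here is a minimal Python sketch. It is only an illustration and not how any particular tool or search engine works: the stop-word list, the sample sentence, and the function names `tokenize`, `encode`, and `top_terms` are all assumptions made for this example.

```python
import re
from collections import Counter

# Hypothetical stop-word list for illustration; real tools use far larger ones.
STOP_WORDS = {"a", "an", "and", "the", "to", "of", "in", "is", "for", "on", "your"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def encode(tokens):
    """Recognition: map each distinct token to an integer ID (first-seen order)."""
    vocab = {}
    ids = []
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
        ids.append(vocab[tok])
    return vocab, ids


def top_terms(tokens, n=3):
    """Understanding: return the n most frequent tokens that are not stop words."""
    counts = Counter(t for t in tokens if t not in STOP_WORDS)
    return counts.most_common(n)


# Made-up sample text, used only to show the flow.
sample = "SEO tools help your content rank. AI SEO tools speed up content research."
tokens = tokenize(sample)
vocab, ids = encode(tokens)

print("Text length in tokens:", len(tokens))
print("First five token IDs:", ids[:5])
print("Most frequent terms:", top_terms(tokens))
```

In this sample, "seo", "tools", and "content" each appear twice, so they come out as the most frequent terms. A real NLP pipeline uses much richer numeric representations than simple integer IDs, but the idea of turning words into numbers and then analyzing them statistically is the same.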

The writer’s job is then to build the text around the results produced by the Google NLP tool. Automated AI tools help create appropriate content.

How accurately your phrases match the relevant keywords will directly affect your SEO ranking.

Matching your content to NLP search results not only saves time but also helps you create better, topic-specific content. The writer must understand how search engines evaluate content.

How AI SEO Can Supercharge Your SEO Strategy?

AI-Based SEO Optimization helps you rank high leaving the guesswork behind!

Although AI and NLP methods are evolving rapidly, other SEO work remains important. Tasks such as inserting H1 and image alt tags into HTML code, building backlinks via guest posts, and doing email outreach to other AI-powered content editors still require attention.
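Checking a page for H1 and image alt tags is easy to script. The following is a minimal sketch using only Python's standard-library `html.parser` module; the class name `SeoTagAudit`, the `audit` helper, and the sample page are assumptions made for illustration, not part of any SEO tool mentioned in this post.

```python
from html.parser import HTMLParser


class SeoTagAudit(HTMLParser):
    """Counts <h1> headings and collects <img> tags that lack alt text."""

    def __init__(self):
        super().__init__()
        self.h1_count = 0
        self.images_missing_alt = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "h1":
            self.h1_count += 1
        elif tag == "img":
            alt = attr_map.get("alt")
            # Treat a missing, empty, or whitespace-only alt as "missing".
            if not alt or not alt.strip():
                self.images_missing_alt.append(attr_map.get("src", "(no src)"))


def audit(html):
    """Return (number of <h1> tags, list of image sources without alt text)."""
    parser = SeoTagAudit()
    parser.feed(html)
    parser.close()
    return parser.h1_count, parser.images_missing_alt


# Made-up sample page, used only to show the check.
page = """
<html><body>
  <h1>Best AI SEO Tools</h1>
  <img src="chart.png" alt="Keyword ranking chart">
  <img src="logo.png">
</body></html>
"""

h1_count, missing = audit(page)
print("H1 tags found:", h1_count)          # prints: H1 tags found: 1
print("Images missing alt text:", missing)  # prints: Images missing alt text: ['logo.png']
```

A script like this only flags what is missing. Writing a descriptive H1 and meaningful alt text is still up to the writer.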

Here is how AI SEO can supercharge your SEO strategy:

- Dissect Competitor SEO Strategy

- Find High-Converting Keywords for Content & Content Marketing

- Track SEO Progress & KPIs

- Visualize & Conceptualize Data

- Save Time and Money on Manual SEO Audits

Several AI-based SEO tools, such as SEOSurfer, Google Analytics, and SEMrush, help you choose the best content optimization strategies. However, as the saying goes, “Content is King”, so here we are focusing on generating high-quality content. A writer must always remember: “Google supports only content that offers value and is unique.”

What Are The Features Of An Ideal Copywriting Software?

An ideal AI tool would track progress made in a user’s writing and provide helpful tips and tricks to improve the content. Further, the automated tool offers a variety of ways to improve the quality of content.

The following is a list of some essential characteristics your choice of AI-based content writing tool should have.

- A content generation tool must be able to create excellent quality content and be easily controlled. The GPT-3 writing model is currently the best technology on the market for copywriting tools powered by artificial intelligence.

- It should match or exceed the quality of content written by a human. Not to forget, the content should be perfectly readable.

- It should be able to create multiple copies of a single piece of content so that you don’t face any duplicate-content issues.

- It should also avoid grammar errors in the generated content. You should not have to spend time checking grammar and proofreading; the software must be able to do it automatically.

- A well-designed application must also be able to create content faster. Additionally, content should be generated by the software with a minimum amount of manual effort.

- You should have complete control over the software and the content it produces. It should also be easy to use without requiring any technical knowledge.

- Pricing plans must be flexible and affordable so that the tool does not burden your budget.

- Good customer ratings and reviews are a must for the software.

These points will help you compare the available tools and choose the most cost-effective content writing tool.

Final Thoughts

The content generation trends and the way search engines crawl the text are rapidly changing.

You need to use every available technique when writing content that is meant to rank high. New and advanced SEO techniques will help you improve your content and outrank your competitors.

During this process, AI-based content and SEO software is your best friend if you want to take things to the next level.

We would suggest trying the above-discussed tools to improve the quality of your content.

But keep in mind that if you’re truly serious about SEO, you will need to stick to these AI-based techniques and use multiple tools to produce fully optimized content.

Most AI tools work on the inputs you provide as a writer or SEO executive. So, one has to acquire detailed and updated knowledge to work in this field.